The AI Dilemma: Fine-Tuning vs Prompting

in Modern LLMs

Should we rely on prompting, or should we invest in fine-tuning the model?

Both approaches can help you get better results from an LLM, but they serve different needs and come with different trade-offs. Let’s explore what each approach involves, when to use them, and how to make the right choice for your project.

What is Prompt-Engineering

Prompt Engineering in Action: A Simple Example

To understand the importance of prompt engineering, let’s look at a simple “before and after” scenario. You’ll see how a little more detail can transform a generic request into a targeted, high-quality output.

Before: The general Prompt

Prompt: Generate a poem about nature. If we ask an LLM to simply "generate a poem on nature," it will give us a general poem, likely making use of a few common elements like trees, rivers, or the sky. The result will be a basic, and somewhat generic, piece of writing that lacks a specific purpose or unique voice. The output is accurate in a broad sense, but it's not very intentional about a specific need.

After:The Concise Prompt

Prompt: Act as an expert in nature poet inspired by the works of Rabindranath Tagore.

Write a four-line poem about the sunrise on a calm lake. The poem should start with the line, “A golden light first kisses the water.” Make the poem feel joyful and healing. Focus on the reflection of the light and end by describing the feeling of hope.

This engineered prompt produces a poem that is not only "accurate" but also meaningful, intentional, and perfectly aligned with our creative vision. It shows that the quality of your output is a direct reflection of the quality of your input.

Popular Prompting Strategies

- Zero-Shot Prompting: This is the most direct approach. You provide the model with a task and no examples. The model relies purely on its pre-trained knowledge to generate a response.

- Few-Shot Prompting: You provide the model with a few examples of the input-output pairs you want to achieve, followed by your new request. This helps the model understand the specific format, style, or pattern you're looking for, guiding it to a more accurate and consistent response.

- Chain-of-Thought Prompting: This powerful technique encourages the model to "think out loud" by asking it to explain its reasoning step-by-step before providing a final answer. This is particularly effective for complex problems, such as mathematical calculations or multi-part logical questions, as it helps the model break down the task and reduce errors.

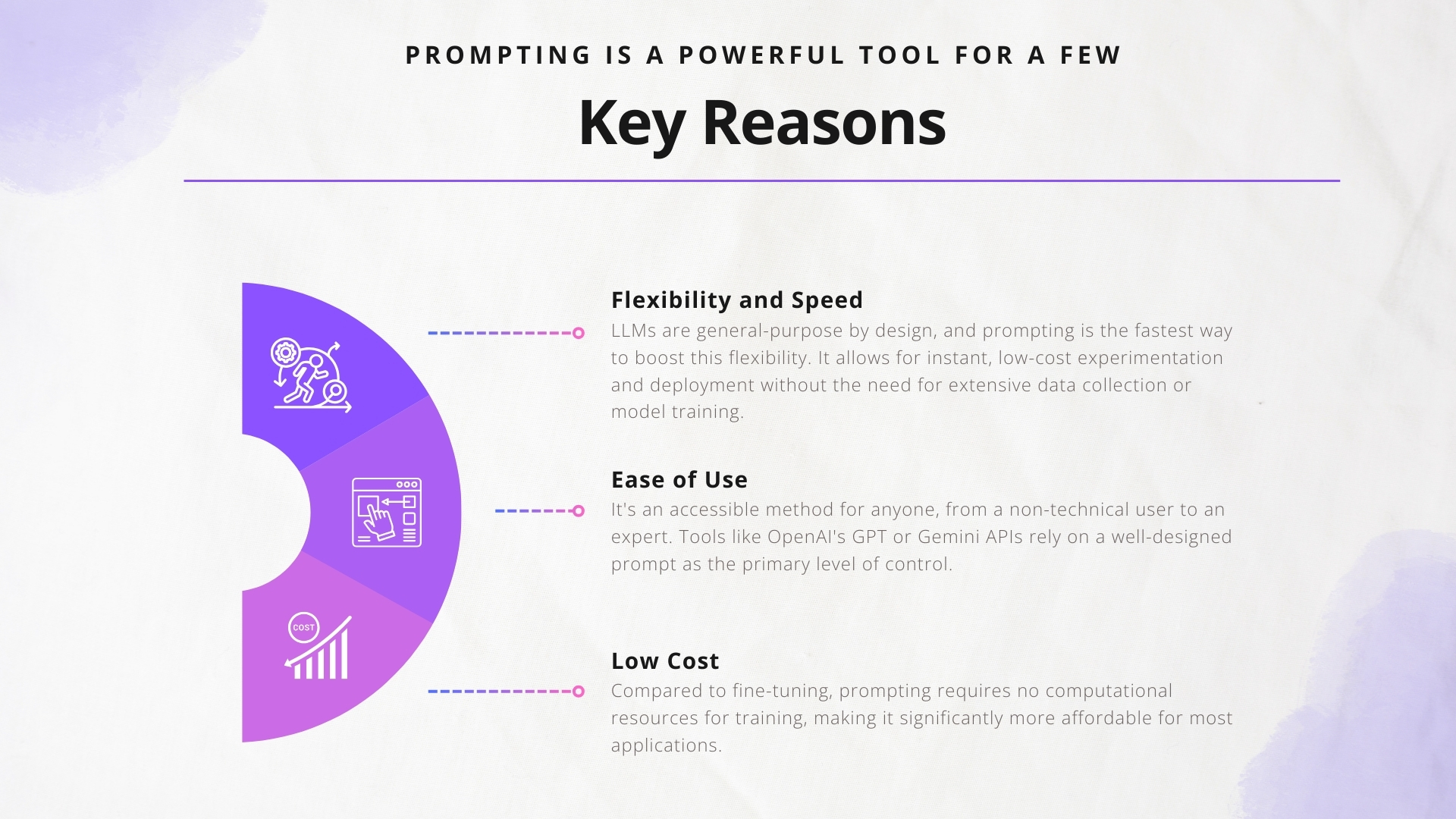

Why Prompting Matters for LLMs

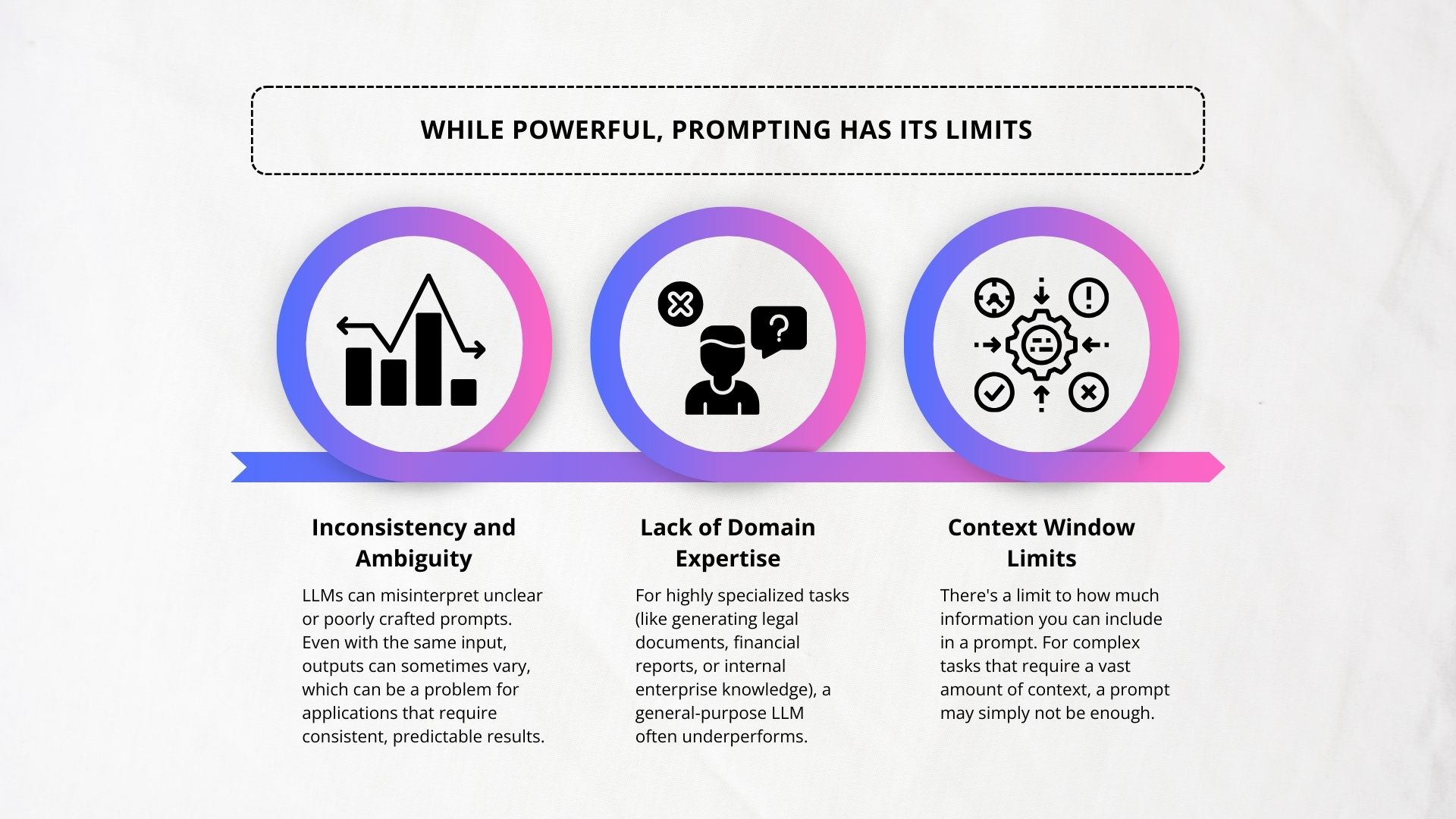

The Limitations of Prompting

Fine-Tuning: Teaching the Model What You Need

- Full Fine-Tuning: is like asking the artist to completely re-learn their craft from scratch, retraining every technique and skill they must only draw blueprints. This is an expensive, time-consuming process that often isn't worth the effort. It's powerful, but it's overkill for most tasks.

- PEFT (Parameter-Efficient Fine-Tuning): A smarter way—give the artist new tools and a sketchbook for blueprints. They keep core skills and adapt quickly, like LoRA adding small light-weight layers of knowledge without changing the whole model.

Why Fine-Tuning Matters

When prompting just isn’t enough, fine-tuning offers a new level of performance and control.

Let’s Understand this with a simple example: The E-commerce Chatbot

Assume you start with a general LLM for your chatbot. When asked, “How do I return this jacket?” it gives a generic reply like “check the return policy online.” When asked about the “Everest” jacket, it may guess or invent details, since it lacks product-specific knowledge.

By fine-tuning your catalog, return policies, support transcripts, and brand guides, the chatbot evolves from a generic assistant into a precise, reliable, and on-brand expert about something prompting alone cannot achieve.

- New Knowledge: It knows your products, so it can explain the exact materials and features of the Everest jacket.

- Reliability: Instead of vague policies, it gives step-by-step return instructions tailored to your company.

- Customization: It speaks in your brand’s voice, whether casual or professional, ensuring every interaction feels on-brand.

The Trade-Offs of Fine-Tuning

- Data Requirement: Fine-tuning needs a well-prepared, labeled dataset. Collecting, cleaning, and maintaining this data takes significant time and resources, and the model’s performance is only as good as the data quality.

- Higher Cost: Training and hosting fine-tuned models require substantial GPU computing, making it far more expensive than simple API-based prompting.

- Greater Complexity: Unlike prompting, you’re responsible for managing the model itself, handling versioning, retraining with new data, and maintaining deployment infrastructure.

Why Both Fine-Tuning and Prompt Engineering Matter in LLM Development

What Happens If You Ignore One (or Both)

-

Ignoring Prompting:

Even with a well-trained model, responses may become unpredictable, generic, or vague. Without effective prompting, you lose control over how the model interprets inputs, making it difficult to guide outputs toward the desired format or tone. -

Ignoring Fine-Tuning:

The model struggles with domain-specific vocabulary, business logic, or specialized tasks. Over-reliance on prompting alone becomes inefficient, and performance drops significantly on structured or high-stakes tasks such as SQL generation, compliance checks, or legal summaries. -

Ignoring Both:

The model remains generic, delivering inconsistent and unreliable results. In enterprise settings, this can lead to outputs that appear intelligent on the surface but fail under pressure—making the system unsuitable for real-world deployment.